AI Agent Security: The Unspoken Prerequisite for Enterprise Adoption

In the race to deploy AI agents across enterprises, a critical element is consistently overlooked—comprehensive security. While organizations rush to implement these powerful automation tools, they’re often building on foundations of sand. The hard truth is that AI agent security isn’t just important; it’s the fundamental blocker preventing widespread, safe adoption in production environments.

The Growing Threat Landscape

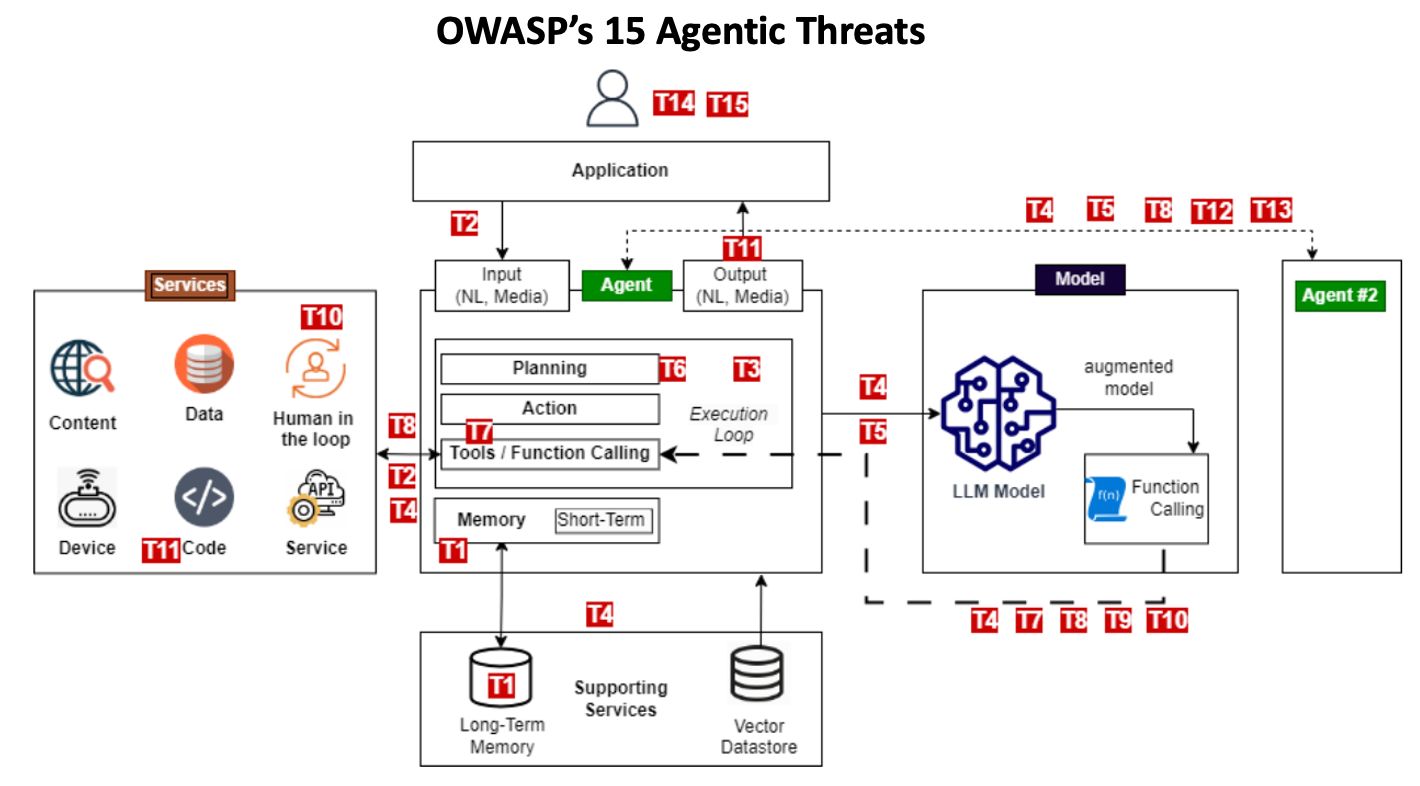

The OWASP Foundation, renowned for its work in web application security, has identified 15 distinct threats specific to AI agent systems. This taxonomy provides a sobering reality check for organizations excited about agentic AI’s transformative potential but blind to its unique security challenges.

These threats span the entire agent architecture—from the LLM models powering reasoning capabilities to the tools agents can access, and the memory systems storing sensitive context. Unlike traditional software, AI agents combine autonomous decision-making with powerful system access, creating novel attack vectors that conventional security approaches aren’t designed to address.

Src: https://genai.owasp.org/resource/agentic-ai-threats-and-mitigations/

Src: https://genai.owasp.org/resource/agentic-ai-threats-and-mitigations/

Four Critical Security Dimensions for AI Agents

1. Identity & Authentication

The foundation of AI agent security begins with robust identity. Without cryptographic identity verification, organizations face risks of agent impersonation and unauthorized privilege escalation. Strong identity models enable:

- Cryptographic verification of agent origins and permissions

- Role-based access controls limiting agent capabilities

- Transparent audit trails for all agent actions

- Prevention of identity spoofing attacks

2. Memory & Knowledge Integrity

AI agents rely on various memory systems—from short-term context to long-term vector stores. Protecting these knowledge repositories is essential:

- Content validation preventing poisoning of memory systems

- Session isolation containing potential compromises

- Verification of information sources before storage

- Protection against cascading hallucination attacks

3. Secure Tool Execution

The most powerful AI agents can execute code and access external tools—creating significant security implications:

- Sandboxed execution environments limiting potential damage

- Rate limiting and quota enforcement

- Detailed execution logs for security monitoring

- Prevention of unauthorized remote code execution

- Fine-grained permission controls for tool access

4. Multi-Agent Trust Frameworks

As organizations deploy multiple specialized agents, secure agent-to-agent communication becomes critical:

- Encrypted communications between agent systems

- Consensus verification for multi-agent decisions

- Authorization boundaries preventing cascade failures

- Protection against communication poisoning and MitM attacks

The C-Suite Imperative

What makes AI agent security particularly challenging is that it can’t be bolted on as an afterthought. The architectural decisions made during initial implementation will fundamentally determine whether an agent system can ever be made secure.

This reality demands that AI security must be a C-suite priority, not just an IT concern. Organizations where security teams are brought in late to “secure” already-deployed agent systems are setting themselves up for potentially catastrophic security failures.

Implementing a Defense-in-Depth Approach

Following OWASP’s agentic threat model, organizations need a comprehensive security strategy combining:

- Proactive measures: Security-by-design principles built into agent architectures

- Detective measures: Continuous monitoring for anomalous agent behavior

- Reactive measures: Containment strategies when threats are detected

Evaluating AI Agent Frameworks

When evaluating AI agent frameworks or building custom solutions, security professionals should immediately reject any system that:

- Doesn’t run agents in secure isolated containers

- Lacks a coherent identity model for agents

- Cannot enforce fine-grained permission boundaries

- Doesn’t provide comprehensive audit logging

- Allows unrestricted tool access

This extends to Machine Control Protocols (MCPs) as well, where secure design principles are equally critical.

Learning from Traditional Software Security

As noted by JPMorgan Chase CISO Pat Opet, the growing risks in software supply chains offer valuable lessons for AI security. Organizations must prioritize security over rushing features to market—a challenge particularly acute in the AI space where competitive pressures drive rapid deployment.

The Path Forward: Security by Default

For AI agents to achieve their transformative potential, comprehensive security must be built in or enabled by default. This means:

- Secure containment of execution environments

- Strong identity and access management

- Continuous validation of memory and knowledge stores

- Fine-grained tool permissioning

- End-to-end audit logging

Action for CISOs & CEOs

- Treat AI agent security as a strategic imperative before any production deployment

- Implement a defense-in-depth approach addressing all 15 threats identified by OWASP

- Establish governance frameworks specific to AI agent deployment

- Develop incident response procedures for AI-specific threats

- Invest in security training for AI engineering teams

The enthusiasm around AI agents is well-founded—these systems promise unprecedented automation capabilities. However, without addressing the fundamental security challenges they present, organizations risk building systems that are powerful but ultimately untrustworthy.

The organizations that will successfully harness AI agents won’t be those who deploy fastest, but those who deploy most securely.

The Reality of OWASP’s 15 Agentic Threats

The threat taxonomy displayed in the diagram represents a comprehensive mapping of attack vectors unique to AI agents. Let’s examine some of the most critical threats and their potential business impacts:

-

Memory Poisoning (T1): When an agent’s memory systems are compromised, attackers can inject malicious information that influences all future decisions. Imagine a financial agent making investment decisions based on deliberately falsified market data.

-

Tool Injection (T7): By manipulating tool selection or execution, attackers can force agents to perform unauthorized actions. This could lead to data exfiltration or privileged access to critical systems.

-

Human Manipulation (T10): Sophisticated social engineering targeting the human-agent interaction point can bypass security controls through authorized human intermediaries.

-

Model Manipulation (T5): When the underlying LLM is compromised, the agent’s entire decision-making process becomes suspect, potentially inserting backdoors across your enterprise systems.

Technical Implementation of Secure Agent Architecture

Building Robust Agent Identity

A proper agent identity system requires:

- Verifiable Credentials: Using PKI infrastructure to sign agent actions and verify origins

- Immutable Audit Chains: Establishing unalterable records of agent activities using append-only logs

- Dynamic Permission Boundaries: Adjusting agent capabilities based on execution context and risk profile

- Revocation Mechanisms: Enabling immediate termination of compromised agent credentials

Protecting Agent Memory Systems

Securing knowledge stores demands:

- Input Sanitization: Validating all information before it enters memory systems

- Provenance Tracking: Maintaining clear lineage of where information originated

- Isolation Boundaries: Preventing cross-contamination between agent sessions

- Consistency Checks: Detecting and correcting anomalous or contradictory information

Securing Tool Access

Tools give agents their power but create significant attack surfaces:

- Least Privilege Execution: Granting only the minimum permissions needed for each task

- Ephemeral Runtime Environments: Creating disposable execution contexts for each tool invocation

- Resource Quotas: Limiting computation, network access, and persistence capabilities

- Behavior Analysis: Identifying anomalous patterns in tool usage and execution paths

Industry-Specific Challenges

Financial Services

Financial institutions face unique requirements when deploying AI agents:

- Regulatory Compliance: Meeting sector-specific requirements like SOX, GLBA, and MiFID II

- Transaction Integrity: Ensuring non-repudiation for agent-initiated financial actions

- Risk Management Integration: Incorporating agent security into existing risk frameworks

Healthcare

For healthcare organizations, agent security intersects with patient safety:

- PHI Protection: Preventing unauthorized access to Protected Health Information

- Clinical Decision Safety: Ensuring agent recommendations can’t harm patients

- Integration with Existing Controls: Aligning with HIPAA and HITRUST frameworks

Emerging Standards and Frameworks

The industry is still developing comprehensive standards for AI agent security, but several promising initiatives are underway:

- NIST AI Risk Management Framework: Provides initial governance guidelines applicable to agent systems

- ISO/IEC JTC 1/SC 42: Developing standards specifically for AI security and trustworthiness

- Industry Consortiums: Organizations like the AI Security Alliance are developing best practices

The Path to Security Maturity

Organizations should approach AI agent security through a progressive maturity model:

- Foundational: Establishing basic identity, access controls, and sandboxing

- Managed: Implementing comprehensive monitoring and incident response

- Optimized: Deploying advanced threat prevention and automated security testing

- Innovative: Contributing to industry standards and pioneering new security approaches

Conclusion: The Business Imperative

The promise of AI agents is tremendous—from operational efficiency to new business capabilities. However, the path to realizing these benefits runs directly through security.

Organizations that treat agent security as an afterthought will inevitably face breaches, compromise, and potentially catastrophic failures. In contrast, those who build on secure foundations will be positioned to safely leverage these powerful technologies.

The question for executives isn’t whether to invest in AI agent security, but how quickly they can establish the necessary security foundations to enable safe adoption. In an environment where AI capabilities are evolving weekly, security must be the constant that enables rather than constrains innovation.

As OWASP’s comprehensive threat model demonstrates, the attack surface is complex and multi-faceted—but with proper architecture, governance, and security controls, organizations can navigate these challenges and unlock the transformative potential of trusted AI agents.

Saptak Sen

If you enjoyed this post, you should check out my book: Starting with Spark.